IMDA (Infocomm Media Development Authority, Singapore) developed AI Verify, an AI governance testing framework and software toolkit. The framework outlines 11 governance principles and aligns with international AI standards from the EU, US, and OECD. AI Verify helps organisations validate AI performance through standardised tests across these principles: transparency, explainability, repeatability/reproducibility, safety, security, robustness, fairness, data governance, accountability, human agency and oversight, and inclusive growth, societal and environmental well-being.

AI Verify was developed in consultation with companies of different sizes and sectors, including AWS, DBS, Google, Meta, Microsoft, Singapore Airlines, NCS/LTA, Standard Chartered, UCARE.AI, and X0PA. It was released for international pilot in May 2022 and open-sourced in 2023, with more than 50 companies including Dell, Hitachi, and IBM participating.

With the rise of Generative AI (GenAI), the AI Verify testing framework has been enhanced to address its unique risks. It now supports testing for both Traditional and GenAI use cases.

The AI Verify testing framework aims to help companies assess their AI systems against 11 internationally recognised AI governance principles:

- Transparency

- Explainability

- Repeatability / Reproducibility

- Safety

- Security

- Robustness

- Fairness

- Data Governance

- Accountability

- Human Agency and Oversight

- Inclusive Growth, Societal and Environmental Well-being

WHO SHOULD USE THE FRAMEWORK?

- AI System Owners / Developers looking to demonstrate their implementation of responsible AI governance practices.

- Internal Compliance Teams looking to ensure responsible AI practices have been implemented.

- External Auditors looking to validate their clients’ implementation of responsible AI practices.

How to use it

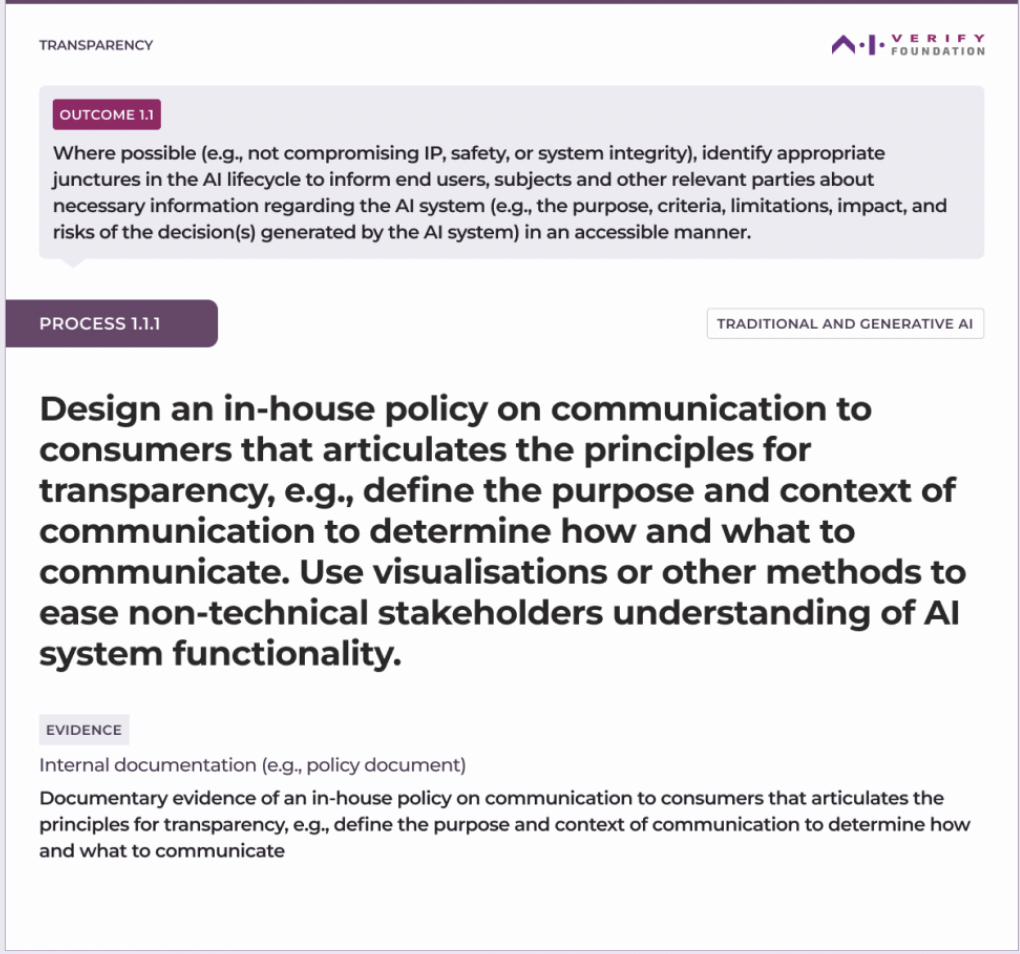

Each item in the checklist consists of:

OUTCOME

The outcomes that you want to achieve for each principle.

PROCESS

Steps you need to take to achieve the desired outcome.

EVIDENCE

Documentary evidence, together with quantitative and qualitative parameters, that validates the process.

For each process, indicate whether you have completed the process checks and, where necessary, elaborate in detail.
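For teams that want to track the checklist programmatically, the outcome / process / evidence structure described above could be modelled roughly as follows. This is only a minimal sketch: the `ChecklistItem` class, its field names, the `completion_by_principle` helper, and the sample entries are all hypothetical illustrations, not part of the AI Verify toolkit or the published checklist.

```python
from dataclasses import dataclass


@dataclass
class ChecklistItem:
    """One checklist entry (hypothetical model, not an AI Verify API)."""
    principle: str          # one of the 11 governance principles
    outcome: str            # what you want to achieve
    process: str            # steps taken to achieve the outcome
    evidence: str           # documents or parameters validating the process
    completed: bool = False # whether the process check has been completed
    elaboration: str = ""   # optional detailed elaboration


def completion_by_principle(items):
    """Return {principle: (completed_count, total_count)}."""
    summary = {}
    for item in items:
        done, total = summary.get(item.principle, (0, 0))
        summary[item.principle] = (done + int(item.completed), total + 1)
    return summary


# Illustrative entries only; the wording is invented for this example.
items = [
    ChecklistItem(
        principle="Transparency",
        outcome="Users know they are interacting with an AI system",
        process="Add a disclosure notice to the user interface",
        evidence="Screenshot of the notice; internal policy document",
        completed=True,
    ),
    ChecklistItem(
        principle="Transparency",
        outcome="Intended use of the system is documented",
        process="Publish a short description of intended use",
        evidence="Link to the published description",
    ),
    ChecklistItem(
        principle="Fairness",
        outcome="Sensitive attributes are identified",
        process="Review training data for sensitive features",
        evidence="Data review report",
        completed=True,
        elaboration="Reviewed by the internal compliance team.",
    ),
]

for principle, (done, total) in completion_by_principle(items).items():
    print(f"{principle}: {done}/{total} process checks completed")
```

A flat list of records like this keeps each process check tied to its evidence, which may make it easier for compliance teams or auditors to see which principles still have open items.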

Download (PDF): AI governance testing framework

Source: Infocomm Media Development Authority, Singapore