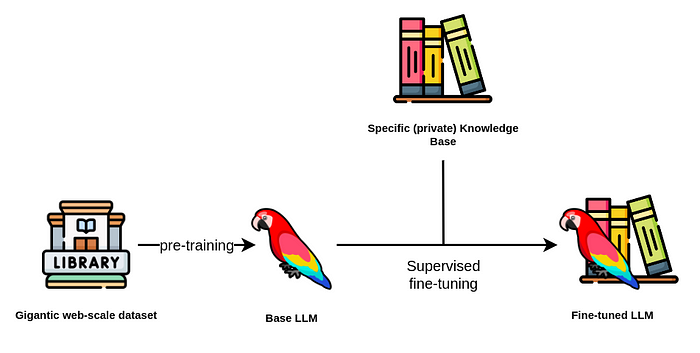

Artificial intelligence models have become incredibly powerful thanks to massive training datasets and billions of parameters. Yet, even the most advanced models often struggle when applied to specific business or industry tasks. Training a new model from scratch requires enormous computing power, data, and time. This is where fine tuning comes in.

Fine tuning allows developers to take a pre-trained model that already understands general language or image patterns and specialize it for a specific area. It is one of the most important techniques in modern AI development, helping organizations reduce costs, save time, and achieve better accuracy with less data.

What Is Model Fine Tuning

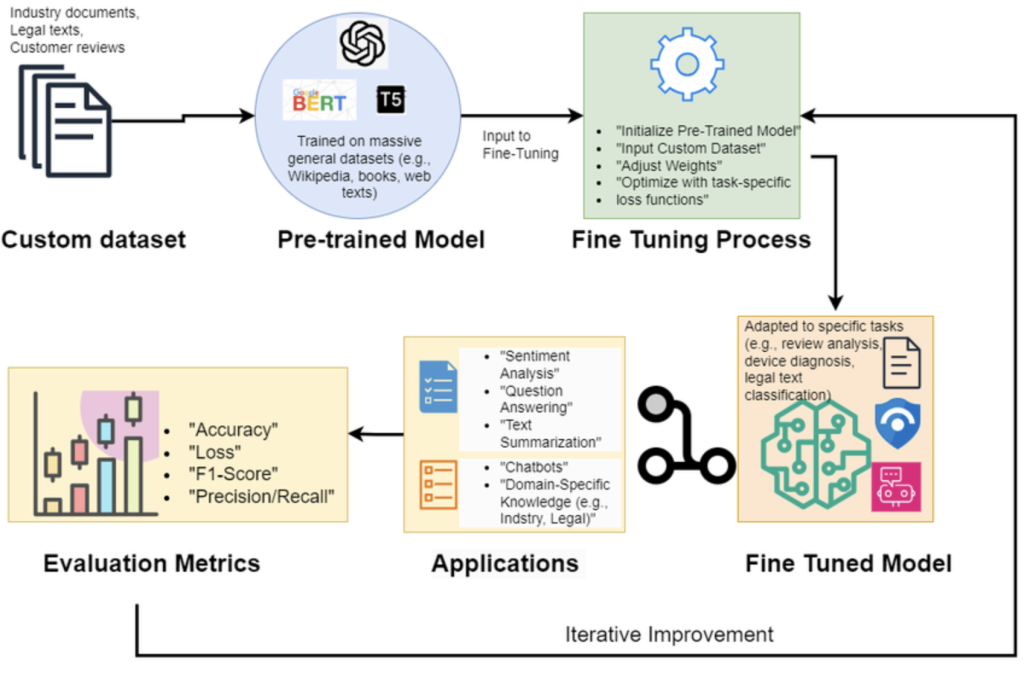

Fine tuning is the process of taking an existing pre-trained model and continuing its training on a smaller, specialized dataset. Instead of starting from zero, the model builds upon what it already knows. This additional training helps it adapt to a particular domain such as healthcare, finance, or customer support.

For example, a large language model trained on billions of general web pages may not handle legal terminology well. Fine-tuning it on legal contracts can make the model far more proficient at understanding and generating legal text.

Fine tuning can also correct limitations of a base model. Many AI models have a knowledge cutoff, meaning they do not know information published after a certain date. Fine tuning with newer data can update their knowledge to a degree. It can also help reduce hallucinations or incorrect outputs by exposing the model to cleaner, domain-specific examples. Additionally, fine tuning allows developers to reduce unwanted bias by retraining the model on more balanced datasets.

Fine Tuning vs Training from Scratch

Training a model from scratch means building it entirely from the beginning using massive amounts of data and computing resources. For large models, this can take months and cost millions of dollars. Fine tuning, on the other hand, uses an existing pre-trained model as a foundation and retrains it on a smaller dataset relevant to a specific goal.

Fine tuning requires much less data, computation, and time, while still producing strong results. It is like customizing a pre-built engine rather than designing a new one. The result is a model that combines broad general knowledge with specialized domain expertise.

Why Fine Tuning Matters

Fine tuning is crucial for making AI truly useful in real-world scenarios. Most organizations do not need a general model that knows everything; they need one that performs exceptionally well within their business context.

Fine tuning delivers multiple benefits. It improves data efficiency because it allows the use of small, focused datasets instead of massive generic ones. It enhances accuracy by aligning the model’s responses with the tone, terminology, and knowledge of a specific field. It can also reduce operational costs by requiring far fewer resources than training a model from scratch.

From a strategic perspective, fine tuning empowers businesses to create unique, differentiated AI products while maintaining control over their proprietary data.

Common Fine Tuning Techniques

There are several methods used to fine-tune models, depending on goals, resources, and model architecture.

Instruction fine tuning trains the model using example pairs of prompts and responses to teach it how to follow certain types of instructions more accurately.

Full fine tuning updates all of a model’s parameters. This can produce the best performance but is very resource-intensive and requires powerful hardware.

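Parameter-efficient fine tuning, or PEFT, trains only a small number of parameters while the rest of the model stays frozen. A popular example is Low-Rank Adaptation (LoRA), which keeps the pre-trained weights fixed and learns small low-rank matrices that are added to them. PEFT greatly reduces computational cost and memory use while often coming close to the accuracy of full fine tuning.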

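Transfer learning involves taking knowledge from a general model and applying it to a related domain or task. Fine tuning is itself a form of transfer learning; a common lightweight variant freezes most of the pre-trained layers and trains only a new task-specific output layer. This is ideal when there is limited domain data available.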

Sequential fine tuning trains the model gradually in multiple stages, each focusing on increasingly specialized datasets. This helps the model retain general knowledge while learning domain specifics.

Multi-task learning fine-tunes a model using datasets that include several related tasks. This keeps the model versatile and capable of handling a range of objectives simultaneously.
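Of the techniques above, the low-rank idea behind LoRA is easy to sketch in plain Python. The following is a minimal illustration, not a production implementation: the helper names (`matmul`, `lora_weight`, `param_counts`), the 4096 x 4096 layer size, and the rank of 8 are assumptions chosen for the example. It shows that the frozen weight `W` is combined with a scaled product of two small matrices, `W + (alpha / r) * B @ A`, and compares how many parameters would be trained in each case.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_weight(w, a, b, alpha):
    """Return W + (alpha / r) * B @ A.

    w: frozen pre-trained weight, shape d_out x d_in (never updated)
    b: trainable matrix, shape d_out x r (starts at zero)
    a: trainable matrix, shape r x d_in
    """
    rank = len(a)
    scale = alpha / rank
    delta = matmul(b, a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def param_counts(d_out, d_in, rank):
    """Trainable parameters for full fine tuning vs. a LoRA adapter on one layer."""
    return d_out * d_in, rank * (d_out + d_in)


# Tiny 3 x 3 layer with a rank-1 adapter (illustrative values).
w = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
a = [[0.1, 0.2, 0.3]]
b_init = [[0.0], [0.0], [0.0]]      # B starts at zero, so the layer is unchanged
b_trained = [[1.0], [0.0], [0.0]]   # pretend training moved B

unchanged = lora_weight(w, a, b_init, alpha=2.0)
adapted = lora_weight(w, a, b_trained, alpha=2.0)

full, lora = param_counts(4096, 4096, 8)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.4%}")
```

For the assumed layer size, the adapter trains 65,536 parameters instead of 16,777,216, which is under half a percent of the layer. Because `B` starts at zero, the adapted model initially behaves exactly like the pre-trained one.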

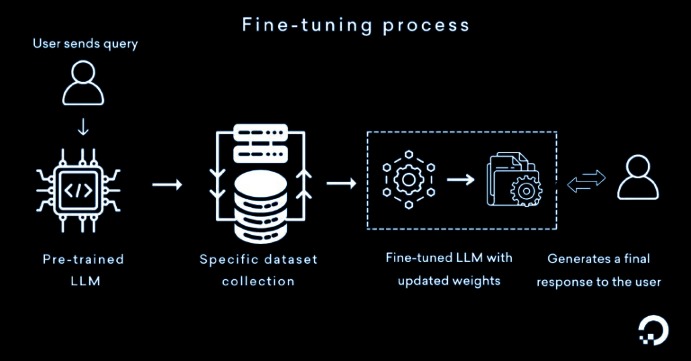

Steps in Fine Tuning an AI Model

Fine tuning typically follows several important stages.

Data preparation: Gather and clean the dataset. This can include structured data from databases or unstructured text from documents and websites. Cleaning, tokenizing, and balancing the dataset are essential for quality results.

Model initialization: Choose a suitable pre-trained model that aligns with your task and load it into the development environment. Examples include models such as GPT, LLaMA, or Mistral.

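Training configuration: Set hyperparameters such as learning rate, batch size, and number of epochs. Fine tuning usually uses a lower learning rate than pre-training so that the model adapts without overwriting what it already knows. Choosing these values carefully helps keep training stable and efficient.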

Training and evaluation: Continue training the model using the prepared dataset. Evaluate its performance regularly on held-out validation data to detect overfitting early.

Optimization: Apply techniques such as early stopping, dropout, or regularization to improve generalization.

Deployment: Once the model performs well, deploy it in a production environment using APIs or AI serving platforms.

Monitoring and maintenance: Track the model’s performance over time. Regularly fine-tune it with fresh data to maintain accuracy as real-world information evolves.
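The training, evaluation, and optimization steps above can be sketched with a deliberately tiny stand-in for a real model. Everything here is an assumption made for illustration: the "pre-trained model" is a one-variable linear function with weights `2.0` and `0.0`, the "domain data" follows `y = 2.5x + 1`, and the hyperparameter values in `config` are arbitrary. The point is the shape of the loop: start from existing weights, train on a small dataset, check a validation split every epoch, and stop early when it no longer improves.

```python
def mse(w, b, data):
    """Mean squared error of the model y = w * x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def fine_tune(w, b, train, val, lr, max_epochs, patience, min_delta=1e-9):
    """Continue training from (w, b) with early stopping on validation loss."""
    best_loss, best_w, best_b = mse(w, b, val), w, b
    stale = 0  # epochs since the last meaningful validation improvement
    for _ in range(max_epochs):
        n = len(train)
        # Full-batch gradient of the MSE with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in train) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in train) / n
        w, b = w - lr * grad_w, b - lr * grad_b

        val_loss = mse(w, b, val)
        if val_loss < best_loss - min_delta:
            best_loss, best_w, best_b, stale = val_loss, w, b, 0
        else:
            stale += 1
            if stale >= patience:
                break  # early stopping
    return best_w, best_b, best_loss


def target(x):
    """Hypothetical domain-specific relationship the model should learn."""
    return 2.5 * x + 1.0


# Data preparation: a small training set and a separate validation set.
train = [(i / 10, target(i / 10)) for i in range(10)]
val = [(x, target(x)) for x in (0.05, 0.35, 0.65, 0.95)]

# Training configuration (illustrative hyperparameters).
config = {"lr": 0.3, "max_epochs": 500, "patience": 5}

# Model initialization: start from "pre-trained" weights, not from zero.
pretrained_w, pretrained_b = 2.0, 0.0
initial_val_loss = mse(pretrained_w, pretrained_b, val)

w, b, final_val_loss = fine_tune(pretrained_w, pretrained_b, train, val, **config)
print(f"validation loss: {initial_val_loss:.4f} -> {final_val_loss:.2e}")
```

Returning the best weights seen on the validation set, rather than the last ones, is one common way to implement early stopping. A real fine tuning run would use a deep learning framework and mini-batches, but the control flow is the same.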

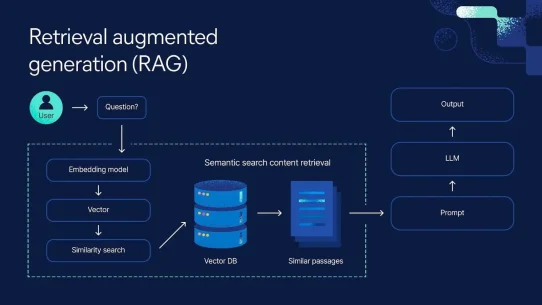

Fine Tuning vs Retrieval-Augmented Generation (RAG)

Fine tuning and RAG are two different ways of improving AI model performance. Fine tuning changes the model’s internal weights through additional training, while RAG enhances a model’s responses by retrieving relevant documents from an external database during inference.

Fine tuning is ideal when you want the model to permanently learn domain knowledge. RAG is better for dynamically updating information without retraining the model. Many production systems actually combine both approaches.
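To make the contrast concrete, here is a minimal sketch of the retrieval half of RAG. It is an assumption-heavy toy: the documents in `DOCS` are invented, and retrieval uses simple word overlap where a real system would typically use vector embeddings and a search index. What it shows is that the model's weights are never touched; new knowledge arrives as text placed in the prompt at inference time.

```python
import re
from collections import Counter

# Hypothetical knowledge base; in practice this would be an external store.
DOCS = [
    "The refund window for annual plans is 30 days.",
    "Support is available by email on weekdays.",
    "Invoices are issued on the first day of each month.",
]


def tokenize(text):
    """Lowercase a string and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query, doc):
    """Count how many query tokens also appear in the document."""
    q, d = Counter(tokenize(query)), Counter(tokenize(doc))
    return sum(min(q[t], d[t]) for t in q)


def retrieve(query, docs, k=1):
    """Return the k documents with the highest overlap score."""
    return sorted(docs, key=lambda doc: score(query, doc), reverse=True)[:k]


def build_prompt(query, docs, k=1):
    """Place retrieved context ahead of the question for the model to use."""
    context = "\n".join(retrieve(query, docs, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


question = "What is the refund window for annual plans?"
top_doc = retrieve(question, DOCS)[0]
prompt = build_prompt(question, DOCS)
print(prompt)
```

Updating the system's knowledge here means editing `DOCS`, with no retraining. A fine-tuned model could still be used to generate the answer, which is how the two approaches are combined.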

Fine Tuning vs Prompt Tuning

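Prompt tuning does not modify the model’s pre-trained weights. In its strict sense, it learns a small set of continuous prompt embeddings, often called soft prompts, that are prepended to the input while the model stays frozen. The term is also used loosely for prompt engineering, where the wording and structure of text prompts are refined by hand to guide the model toward better responses. Either way, fine tuning changes the model itself, while prompt tuning changes only what the model receives as input. Prompt tuning is faster and cheaper but generally less powerful for deep domain customization.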

Challenges in Fine Tuning

While fine tuning is efficient, it is not without challenges. Small or low-quality datasets can lead to overfitting or biased results. Selecting the right hyperparameters is complex and often requires experimentation. Models may also experience catastrophic forgetting, where they lose general knowledge while learning specialized information.

Another key consideration is cost and hardware availability. Even with PEFT, fine tuning large language models can require GPUs or TPUs with high memory capacity. Properly managing resources and setting realistic goals is essential for success.
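A rough back-of-the-envelope calculation shows why memory becomes the bottleneck. The sketch below rests on stated assumptions: a hypothetical 7 billion parameter model, 16-bit weights, and the commonly cited figure of about 16 bytes per parameter for full fine tuning with the Adam optimizer in mixed precision (2 for weights, 2 for gradients, and 12 for the 32-bit master weights and two optimizer moments). Activations and framework overhead are ignored, so real usage would be higher.

```python
BYTES_PER_GB = 1e9  # decimal gigabytes, for simplicity


def memory_gb(n_params, bytes_per_param):
    """Approximate memory in GB for a given per-parameter footprint."""
    return n_params * bytes_per_param / BYTES_PER_GB


N_PARAMS = 7_000_000_000  # hypothetical model size

# Just holding 16-bit weights, as needed for inference.
weights_only_gb = memory_gb(N_PARAMS, 2)

# Full fine tuning with mixed-precision Adam:
# 2 (weights) + 2 (gradients) + 4 (master weights) + 4 + 4 (Adam moments).
full_finetune_gb = memory_gb(N_PARAMS, 2 + 2 + 4 + 4 + 4)

print(f"weights only:     {weights_only_gb:.0f} GB")
print(f"full fine tuning: {full_finetune_gb:.0f} GB")
```

Under these assumptions the weights alone take about 14 GB, while full fine tuning needs roughly 112 GB before activations. PEFT methods narrow this gap because gradients and optimizer state are kept only for the small set of trainable parameters, though the frozen weights still have to fit in memory.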

Conclusion

Fine tuning represents one of the most powerful tools for bringing artificial intelligence into real-world use. It bridges the gap between general pre-trained models and the unique needs of specific industries or businesses. By adapting a foundation model to a particular domain, organizations can achieve better performance, reliability, and alignment without the expense of full model training.

When done correctly, fine tuning results in AI systems that are more accurate, more reliable, and more relevant to the people who use them. For any company building intelligent solutions, understanding and applying fine tuning techniques is not just a technical advantage but a strategic necessity.